Google recently introduced AI-generated overviews that summarize search results, a feature designed to streamline the process of finding information online. Shortly after launch, however, the tool faced significant backlash for providing inaccurate and misleading information. The episode highlights the challenges and risks of integrating AI into search engines.

False AI Claims and Immediate Actions

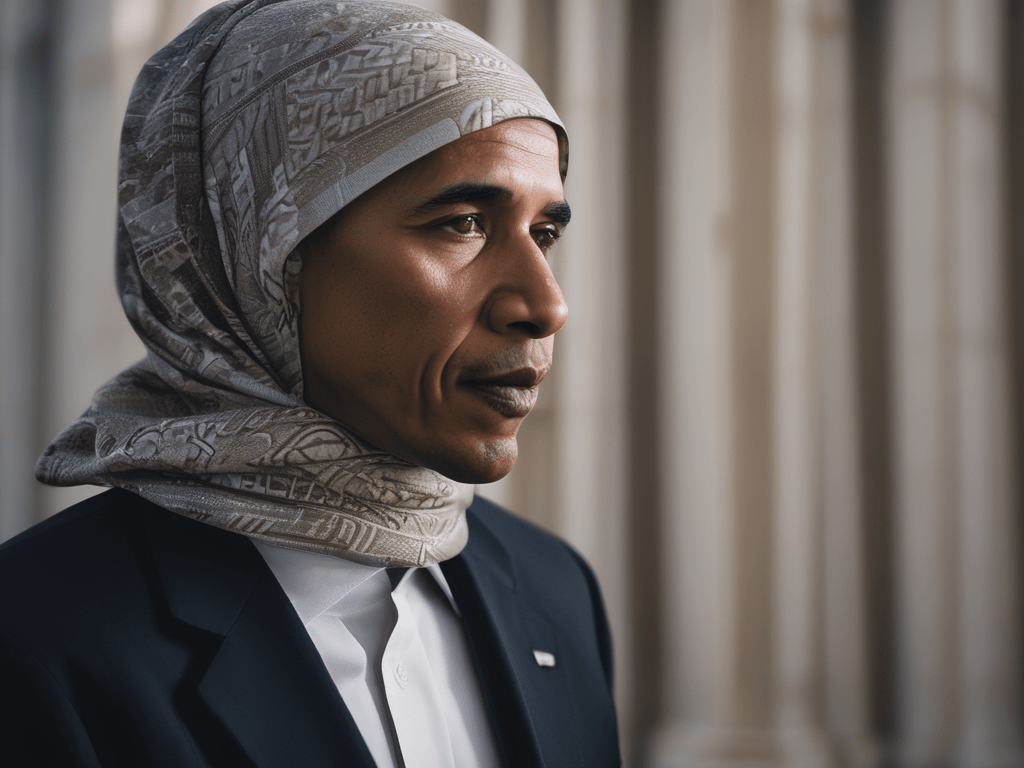

The AI overview tool, part of Google’s broader push to incorporate its Gemini AI technology into its products, aims to provide quick answers by summarizing search results. Users quickly found that it was far from infallible. For instance, it falsely claimed that former President Barack Obama is a Muslim, repeating a common misconception; in reality, Obama is a Christian. Another erroneous summary stated that none of Africa’s 54 recognized countries starts with the letter ‘K’, overlooking Kenya.

In response to these errors, Google swiftly removed the AI overviews for these queries, citing violations of company policies. Colette Garcia, a Google spokesperson, emphasized that most AI overviews deliver high-quality information, but acknowledged the need for continual improvement and swift corrective action when mistakes are identified.

The Experimental Nature of Generative AI

Google’s AI search overview itself acknowledges that “generative AI is experimental,” a reminder of the risks of relying on AI for information retrieval. Despite extensive testing meant to mimic potential bad actors and prevent false results, some errors still slipped through, showing how difficult it is to guarantee accuracy with a technology at this early stage.

Broader Implications and Public Response

The false claims have raised concerns about the reliability of AI-generated information. Google, which built its reputation as a trusted source for online search, risks damaging its credibility with these missteps. The stakes are especially high as it competes with rivals such as OpenAI and Meta in the fast-moving AI field.

Additional Examples of AI Errors

Beyond these high-profile falsehoods, Google’s AI has also given confusing or incorrect answers to less consequential queries. Asked about the sodium content of pickle juice, for example, it gave contradictory information that differed sharply from actual product labels. In another case, asked what data was used to train Google’s AI, the tool said it was uncertain whether copyrighted materials were included, a major point of contention in the AI industry.

Historical Context and Ongoing Challenges

This is not the first time Google has had to retract or adjust an AI feature because of inaccuracies. In February, the company paused its AI image generator after it produced historically inaccurate images that largely showed people of color in place of White individuals. Such incidents illustrate how difficult it remains to make AI tools reliable.

User Control and Future Directions

Google’s Search Labs webpage allows users to toggle the AI search overview feature on and off, providing some control over the use of this experimental tool. As Google continues to refine its AI technologies, the company must balance innovation with reliability to maintain user trust.

The recent errors in Google’s AI-generated search overviews show the risks of building AI into everyday tools. While the technology promises faster information retrieval, accuracy and reliability remain paramount. Google’s quick response suggests a commitment to improvement, but the incidents also point to the need for ongoing vigilance and refinement in AI applications.
